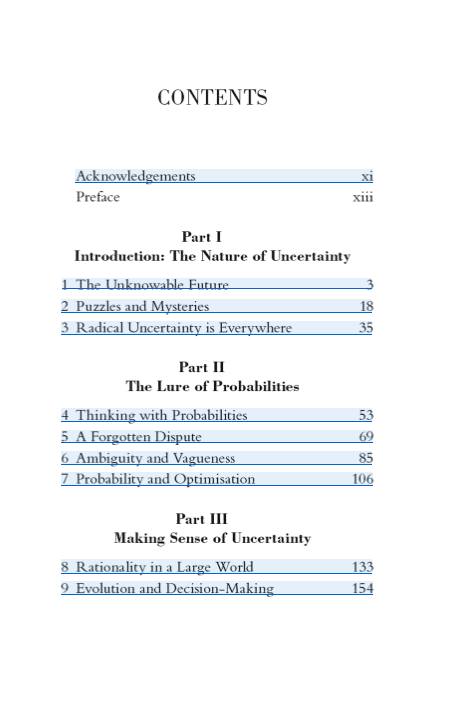

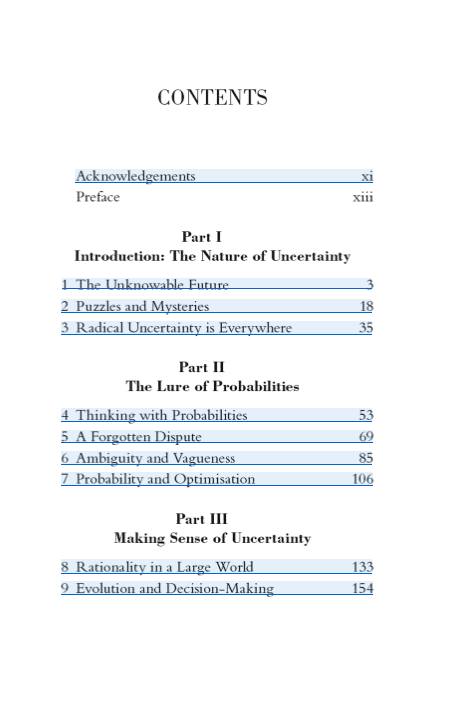

by Mervyn King and John Kay | Little, Brown. 5 Mar 2020

A podcast by the authors is available here

A critical review here: Are economists really this stupid?

A news service focusing on developments in monitoring and evaluation methods relevant to development programmes with social development objectives. Managed by Rick Davies, since 1997

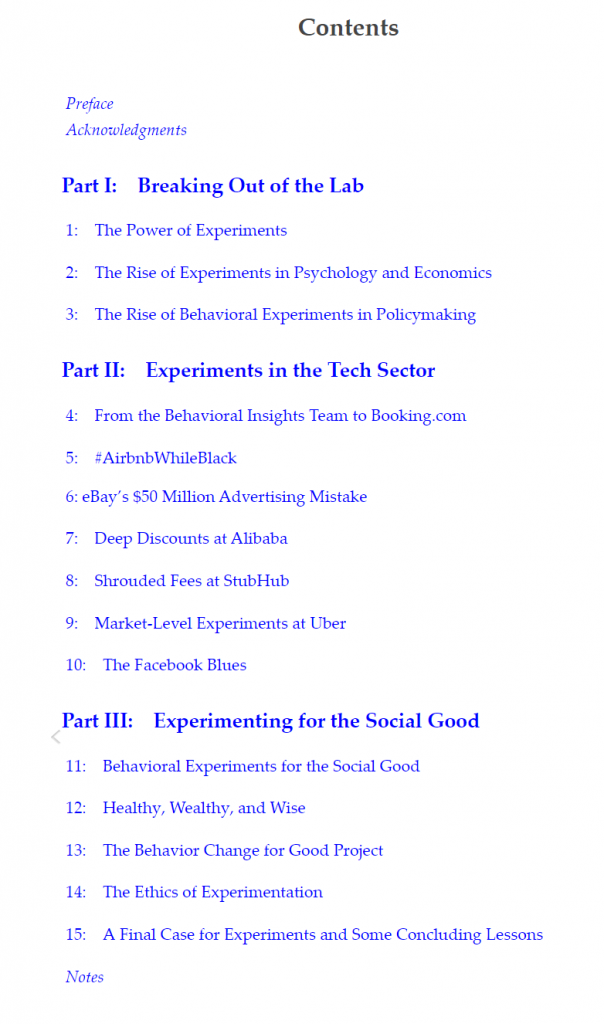

By Michael Luca and Max H. Bazerman, March 2020. Published by MIT Press

How organizations—including Google, StubHub, Airbnb, and Facebook—learn from experiments in a data-driven world.

Have you logged into Facebook recently? Searched for something on Google? Chosen a movie on Netflix? If so, you’ve probably been an unwitting participant in a variety of experiments—also known as randomized controlled trials—designed to test the impact of changes to an experience or product. Once an esoteric tool for academic research, the randomized controlled trial has gone mainstream—and is becoming an important part of the managerial toolkit. In The Power of Experiments: Decision-Making in a Data Driven World, Michael Luca and Max Bazerman explore the value of experiments and the ways in which they can improve organizational decisions. Drawing on real world experiments and case studies, Luca and Bazerman show that going by gut is no longer enough—successful leaders need frameworks for moving between data and decisions. Experiments can save companies money—eBay, for example, discovered how to cut $50 million from its yearly advertising budget without losing customers. Experiments can also bring to light something previously ignored, as when Airbnb was forced to confront rampant discrimination by its hosts. The Power of Experiments introduces readers to the topic of experimentation and the managerial challenges that surround them. Looking at experiments in the tech sector and beyond, this book offers lessons and best practices for making the most of experiments.

See also a World Bank blog review by David McKenzie

WIDER Working Paper 2020/20. Richard Manning, Ian Goldman, and Gonzalo Hernández Licona. PDF copy available

Abstract: In 2006 the Center for Global Development’s report ‘When Will We Ever Learn? Improving lives through impact evaluation’ bemoaned the lack of rigorous impact evaluations. The authors of the present paper researched international organizations and countries including Mexico, Colombia, South Africa, Uganda, and Philippines to understand how impact evaluations and systematic reviews are being implemented and used, drawing out the emerging lessons. The number of impact evaluations has risen (to over 500 per year), as have those of systematic reviews and other synthesis products, such as evidence maps. However, impact evaluations are too often donor-driven, and not embedded in partner governments. The willingness of politicians and top policymakers to take evidence seriously is variable, even in a single country, and the use of evidence is not tracked well enough. We need to see impact evaluations within a broader spectrum of tools available to support policymakers, ranging from evidence maps, rapid evaluations, and rapid synthesis work, to formative/process evaluations and classic impact evaluations and systematic reviews.

Selected quotes

4.1 Adoption of IEs

On the basis of our survey, we feel that real progress has been made since 2006 in the adoption of IEs to assess programmes and policies in LMICs. As shown above, this progress has not just been in terms of the number of IEs commissioned, but also in the topics covered, and in the development of a more flexible suite of IE products. There is also some evidence, though mainly anecdotal, that the insistence of the IE community on rigour has had some effect both in levering up the quality of other forms of evaluation and in gaining wider acceptance that ‘before and after’ evaluations with no valid control group tell one very little about the real impact of interventions. In some countries, such as South Africa, Mexico, and Colombia, institutional arrangements have favoured the use of evaluations, including IEs, although more uptake is needed.

There is also perhaps a clearer understanding of where IE techniques can or cannot usefully be applied, or combined with other types of evaluation.

At the same time, some limitations are evident. In the first place, despite the application of IE techniques to new areas, the field remains dominated by medical trials and interventions in the social sectors. Second, even in the health sector, other types of evaluation still account for the bulk of total evaluations, whether by donor agencies or LMIC governments.

Third, despite the increase in willingness of a few LMICs to finance and commission their own IEs, the majority of IEs on policies and programmes in such countries are still financed and commissioned by donor agencies, albeit in some cases with the topics defined by the countries, such as in 3ie’s policy windows. In quite a few cases, the prime objectives of such IEs are domestic accountability and/or learning within the donor agency. We believe that greater local ownership of IEs is highly desirable. While there is much that could not have been achieved without donor finance and commissioning, our sense is that—as with other forms of evaluation—a more balanced pattern of finance and commissioning is needed if IEs are to become a more accepted part of national evidence systems.

Fourth, the vast majority of IEs in LMICs appear to have ‘northern’ principal investigators. Undoubtedly, quality and rigour are essential to IEs, but it is important that IEs should not be perceived as a supply-driven product of a limited number of high-level academic departments in, for the most part, Anglo-Saxon universities, sometimes mediated through specialist consultancy firms. Fortunately, ‘southern’ capacity is increasing, and some programmes have made significant investments in developing this. We take the view that this progress needs to be ramped up very considerably in the interests of sustainability, local institutional development, and contributing over time to the local culture of evidence.

Fifth, as pointed out in Section 2.1, the financing of IEs depends to a troubling extent on a small body of official agencies and foundations that regard IEs as extremely important products. Major shifts in policy by even a few such agencies could radically reduce the number of IEs being financed.

Finally, while IEs of individual interventions are numerous and often valuable to the programmes concerned, IEs that transform thinking about policies or broad approaches to key issues of development are less evident. The natural tools for such results are more often synthesis products than one-off IEs, and to these we now turn.

4.2 Adoption of synthesis products (building body of evidence)

Systematic reviews and other meta-analyses depend on an adequate underpinning of well structured IEs, although methodological innovation is now using a more diverse set of sources. The take-off of such products therefore followed the rise in the stock of IEs, and can be regarded as a further wave of the ‘evidence revolution’, as it has been described by Howard White (2019). Such products are increasingly necessary, as the evidence from individual IEs grows.

As with IEs, synthesis products have diversified from full systematic reviews to a more flexible suite of products. We noted examples from international agencies in Section 2.1 and to a lesser extent from countries in Section 3, but many more could be cited. In several cases, synthesis products seek to integrate evidence from quasi-experimental evaluations (e.g. J-PAL’s Policy Insights) or other high-quality research and evaluation evidence.

The need to understand what is now available and where the main gaps in knowledge exist has led in recent years to the burgeoning of evidence maps, pioneered by 3ie but now produced by a variety of institutions and countries. The example of the 500+ evaluations in Uganda cited earlier shows the range of evidence that already exists, which should be mapped and used before new evidence is sought. This should be a priority in all countries.

The popularity of evidence maps shows that there is now a real demand to ‘navigate’ the growing body of IE-based evidence in an efficient manner, as well as to understand the gaps that still exist. The innovation happening also in rapid synthesis shows the demand for synthesis products—but more synthesis is still needed in many sectors and, bearing in mind the expansion in IEs, should be increasingly possible.

Gusenbauer, Michael, and Neal R. Haddaway. 2019. ‘Which Academic Search Systems Are Suitable for Systematic Reviews or Meta-Analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed, and 26 Other Resources’. Research Synthesis Methods.

Haddaway, Neal, and Michael Gusenbauer. 2020. ‘A Broken System – Why Literature Searching Needs a FAIR Revolution’. LSE (blog). 3 February 2020.

“….searches on Google Scholar are neither reproducible, nor transparent. Repeated searches often retrieve different results and users cannot specify detailed search queries, leaving it to the system to interpret what the user wants.

However, systematic reviews in particular need to use rigorous, scientific methods in their quest for research evidence. Searches for articles must be as objective, reproducible and transparent as possible. With systems like Google Scholar, searches are not reproducible – a central tenet of the scientific method.

Specifically, we believe there is a very real need to drastically overhaul how we discover research, driven by the same ethos as in the Open Science movement. The FAIR data principles offer an excellent set of criteria that search system providers can adapt to make their search systems more adequate for scientific search, not just for systematic searching, but also in day-to-day research discovery:

Rick Davies comment: I highly recommend using Lens.org, a search facility mentioned in the second paper above.

DellaVigna, Stefano, Devin Pope, and Eva Vivalt. 2019. ‘Predict Science to Improve Science’. Science 366 (6464): 428–29.

Selected quotes follow:

The limited attention paid to predictions of research results stands in contrast to a vast literature in the social sciences exploring people’s ability to make predictions in general.

We stress three main motivations for a more systematic collection of predictions of research results.

1. The nature of scientific progress. A new result builds on the consensus, or lack thereof, in an area and is often evaluated for how surprising, or not, it is. In turn, the novel result will lead to an updating of views. Yet we do not have a systematic procedure to capture the scientific views prior to a study, nor the updating that takes place afterward.

2. A second benefit of collecting predictions is that they can not only reveal when results are an important departure from expectations of the research community and improve the interpretation of research results, but they can also potentially help to mitigate publication bias. It is not uncommon for research findings to be met by claims that they are not surprising. This may be particularly true when researchers find null results, which are rarely published even when authors have used rigorous methods to answer important questions (15). However, if priors are collected before carrying out a study, the results can be compared to the average expert prediction, rather than to the null hypothesis of no effect. This would allow researchers to confirm that some results were unexpected, potentially making them more interesting and informative because they indicate rejection of a prior held by the research community; this could contribute to alleviating publication bias against null results.

3. A third benefit of collecting predictions systematically is that it makes it possible to improve the accuracy of predictions. In turn, this may help with experimental design. For example, envision a behavioral research team consulted to help a city recruit a more diverse police department. The team has a dozen ideas for reaching out to minority applicants, but the sample size allows for only three treatments to be tested with adequate statistical power. Fortunately, the team has recorded forecasts for several years, keeping track of predictive accuracy, and they have learned that they can combine team members’ predictions, giving more weight to “superforecasters” (9). Informed by its longitudinal data on forecasts, the team can elicit predictions for each potential project and weed out those interventions judged to have a low chance of success or focus on those interventions with a higher value of information. In addition, the research results of those projects that did go forward would be more impactful if accompanied by predictions that allow better interpretation of results in light of the conventional wisdom.
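The pooling step described in the third motivation can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' method: the forecaster names, track records, and predicted effects are all hypothetical, weighting by inverse mean absolute error is one simple choice among many, and ranking by pooled predicted effect stands in for the richer "value of information" judgment the quote mentions.

```python
from statistics import mean


def accuracy_weights(past_errors):
    """Weight each forecaster by the inverse of their mean absolute past error.

    Inverse-error weighting is an assumption made for illustration only.
    """
    inverse = {name: 1.0 / mean(errs) for name, errs in past_errors.items()}
    total = sum(inverse.values())
    return {name: w / total for name, w in inverse.items()}


def combine(forecasts, weights):
    """Weighted average of the forecasts made for one intervention."""
    return sum(weights[name] * value for name, value in forecasts.items())


# Hypothetical track record: absolute forecast errors on earlier studies.
past_errors = {"ana": [1.0, 1.0], "ben": [2.0, 2.0], "cy": [4.0, 4.0]}

# Hypothetical predicted effects for four candidate outreach ideas.
predictions = {
    "text reminder": {"ana": 4.0, "ben": 2.0, "cy": 1.0},
    "community event": {"ana": 1.0, "ben": 3.0, "cy": 6.0},
    "personal letter": {"ana": 2.5, "ben": 2.5, "cy": 2.5},
    "radio ad": {"ana": 0.5, "ben": 1.0, "cy": 2.0},
}

weights = accuracy_weights(past_errors)
pooled = {idea: combine(f, weights) for idea, f in predictions.items()}

# Keep the three ideas with the highest pooled prediction, since the
# sample size in the quoted example supports only three treatments.
shortlist = sorted(pooled, key=pooled.get, reverse=True)[:3]
print(shortlist)
```

The forecaster with the best track record ("ana") carries the most weight, so ideas she rates highly move up the shortlist even when less accurate colleagues disagree.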

Rick Davies comment: I have argued for years that evaluators should start by eliciting clients’ and other stakeholders’ predictions of the outcomes of interest that an evaluation might uncover (e.g. Bangladesh, 2004). I can’t yet think of any instance where my efforts have been successful, but I have an upcoming opportunity and will try once again, perhaps armed with these two papers.

See also Stefano DellaVigna and Devin Pope. 2016. ‘Predicting Experimental Results: Who Knows What?’ National Bureau of Economic Research.

ABSTRACT

Academic experts frequently recommend policies and treatments. But how well do they anticipate the impact of different treatments? And how do their predictions compare to the predictions of non-experts? We analyze how 208 experts forecast the results of 15 treatments involving monetary and non-monetary motivators in a real-effort task. We compare these forecasts to those made by PhD students and non-experts: undergraduates, MBAs, and an online sample. We document seven main results. First, the average forecast of experts predicts quite well the experimental results. Second, there is a strong wisdom-of-crowds effect: the average forecast outperforms 96 per cent of individual forecasts. Third, correlates of expertise (citations, academic rank, field, and contextual experience) do not improve forecasting accuracy. Fourth, experts as a group do better than non-experts, but not if accuracy is defined as rank-ordering treatments. Fifth, measures of effort, confidence, and revealed ability are predictive of forecast accuracy to some extent, especially for non-experts. Sixth, using these measures we identify ‘superforecasters’ among the non-experts who outperform the experts out of sample. Seventh, we document that these results on forecasting accuracy surprise the forecasters themselves. We present a simple model that organizes several of these results and we stress the implications for the collection of forecasts of future experimental results.
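The wisdom-of-crowds result in the abstract can be illustrated with a small calculation: average the individual forecasts, then count how many individuals the average beats. The sketch below uses made-up forecasts and mean absolute error as the accuracy measure; both are assumptions chosen for illustration and are not taken from the paper.

```python
from statistics import mean


def mean_abs_error(forecast, actual):
    """Average absolute gap between forecast and actual results."""
    return mean(abs(f - a) for f, a in zip(forecast, actual))


def crowd_vs_individuals(forecasts, actual):
    """Return the crowd's error and the share of individuals it outperforms."""
    n_treatments = len(actual)
    # The "crowd" forecast is the simple average across forecasters.
    crowd = [mean(f[i] for f in forecasts.values()) for i in range(n_treatments)]
    crowd_error = mean_abs_error(crowd, actual)
    errors = {name: mean_abs_error(f, actual) for name, f in forecasts.items()}
    beaten = sum(1 for e in errors.values() if e > crowd_error)
    return crowd_error, beaten / len(errors)


# Hypothetical results for three treatments and four forecasters.
actual = [10.0, 20.0, 30.0]
forecasts = {
    "a": [14.0, 16.0, 34.0],
    "b": [6.0, 24.0, 26.0],
    "c": [12.0, 22.0, 27.0],
    "d": [9.0, 17.0, 33.0],
}

crowd_error, share_beaten = crowd_vs_individuals(forecasts, actual)
print(round(crowd_error, 3), share_beaten)
```

In this toy data the individual errors point in opposite directions, so they largely cancel in the average. That cancellation is the mechanism usually offered for why an average forecast can outperform most of the individuals who contribute to it.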

See also: The Social Science Prediction Platform, developed by the same authors.

Twitter responses to this post:

Howard White (@HowardNWhite): Ask decision-makers what they expect research findings to be before you conduct the research to help assess the impact of the research. Thanks to @MandE_NEWS for the pointer. https://socialscienceprediction.org

Marc Winokur (@marc_winokur), replying to @HowardNWhite and @MandE_NEWS: For our RCT of DR in CO, the child welfare decision makers expected a “no harm” finding for safety, while other stakeholders expected kids to be less safe. When we found no difference in safety outcomes, but improvements in family engagement, the research impact was more accepted.

By Matthew Hutson, The New Yorker, January 9, 2020. Available online

This article is well worth reading because it deals with many measurement and analysis issues that evaluators should be very familiar with.

Selected quotes follow:

Criminologists sometimes describe crime as a “chaotic system,” and countless factors contribute to it.

The first problem with understanding crime is that measuring it is harder than it sounds.

Even if we had perfect measurements of crime rates, we’d need to sort correlation from causation.

The passage of time makes it especially difficult to sort correlation from causation. Statisticians are always on the lookout for a phenomenon they call “regression toward the mean.”

Mechanisms matter, too: in addition to knowing that something works, we want to know why it works.

In some cases, decades must pass before the effects of an intervention become visible.

In some cases, findings reverse, then reverse again.

Beyond measuring crime and determining its causes, a third difficulty lies in predicting the effects of interventions. Peter Grabosky, a political scientist, wrote that “the tendency to overgeneralize” might be “the most common pitfall” in the study of anti-crime interventions.

The converse is also true: crime isn’t a purely local phenomenon, and interventions in one place may affect criminal behavior in another.

Sampson thinks that criminologists should spend less time trying to figure out what causes crime—in many cases, it’s an impossible task—and turn, instead, to investigating the effects of law-enforcement policies

Criminologists may disagree on questions of causality, but they agree that outsiders underestimate the complexity of criminology. “Everyone thinks they know what causes crime,” Sampson told me. Lauritsen concurred: “Everybody has a strong view that some factor is responsible, whether it’s video games, bad music, or sexist attitudes,” she said. Kleiman complained about the “very primitive models people have in their heads” when it comes to crime: “Most of those models imply that more severity of punishment is better, which is almost certainly false.” He went on, “Anyone who hasn’t studied this professionally has more confidence than they ought to have. You have to really look at it hard to see how confusing it is.”

Criminologists face a problem that’s common in many fields: overdetermination. Why does someone commit a crime? Was it peer pressure, poverty, a broken family, broken windows, bad genes, bad parenting, under-policing, leaded gasoline, Judas Priest? “You could just keep stepping back and back and back and back, and you wonder when, ultimately, you’re going to draw a line,” Lauritsen told me. “It could be drawn at probably thousands of points.” Criminologists aren’t the only researchers who study overdetermined subjects: biologists, who have long sought specific genes for diseases, have come to realize that many traits and illnesses may be “omnigenic”—determined by countless genes. The sociologist David Matza summed up the difficulty, in 1964: “When factors become too numerous, we are in the hopeless position of arguing that everything matters.”

Still, it’s human nature to prefer comprehensible stories to uninterpretable complexity.

Perhaps, whenever someone offers up an especially compelling explanation for a rise or fall in crime, we should be wary. We might recognize that criminology—a field with direct bearing on many charged issues—is also slow and confounding, with answers that may come decades late or not at all. The moral and social complexity of crime makes simple accounts of it all the more appealing. In hearing an explanation for its rise or fall, we might ask: What kind of story is its bearer trying to tell?

22 January 2020. Aimed at researchers, but equally relevant to evaluators. Quoted in full below, available online here. Bold highlighting is mine.

Every research paper tells a story, but the pressure to provide ‘clean’ narratives is harmful to the scientific endeavour. Research manuscripts provide an account of how their authors addressed a research question or questions, the means they used to do so, what they found and how the work (dis)confirms existing hypotheses or generates new ones. The current research culture is characterized by significant pressure to present research projects as conclusive narratives that leave no room for ambiguity or for conflicting or inconclusive results. The pressure to produce such clean narratives, however, represents a significant threat to validity and runs counter to the reality of what science looks like.

Prioritizing conclusive over transparent research narratives incentivizes a host of questionable research practices: hypothesizing after the results are known, selectively reporting only those outcomes that confirm the original predictions or excluding from the research report studies that provide contradictory or messy results. Each of these practices damages credibility and presents a distorted picture of the research that prevents cumulative knowledge.

During peer review, reviewers may occasionally suggest that the authors ‘reframe’ the reported work. While this is not problematic for exploratory research, it is inappropriate for confirmatory research—that is, research that tests pre-existing hypotheses. Altering the hypotheses or predictions of confirmatory research after the fact invalidates inference and renders the research fundamentally unreliable. Although these reframing suggestions are made in good faith, we will always overrule them, asking authors to present their hypotheses and predictions as originally intended.

Preregistration is being increasingly adopted across different fields as a means of preventing questionable research practices and increasing transparency. As a journal, we strongly support the preregistration of confirmatory research (and currently mandate registration for clinical trials). However, preregistration has little value if authors fail to abide by it or do not transparently report whether their project differs from what they preregistered and why. We ask that authors provide links to their preregistrations, specify the date of preregistration and transparently report any deviations from the original protocol in their manuscripts.

There is occasionally valid reason to deviate from the preregistered protocol, especially if that protocol did not have the benefit of peer review before the authors carried out their research (as in Registered Reports). For instance, it sometimes becomes apparent during peer review that a preregistered analysis is inappropriate or suboptimal. For all deviations from the preregistered protocol, we ask authors to indicate in their manuscripts how they deviated from their original plan and explain their reason for doing so (e.g., flaw, suboptimality, etc.). To ensure transparency, unless a preregistered analysis plan is unquestionably flawed, we ask that authors also report the results of their preregistered analyses alongside the new analyses.

Occasionally, authors may be tempted to drop a study from their report for reasons other than poor quality (or reviewers may make that recommendation)—for instance, because the results are incompatible with other studies reported in the paper. We discourage this practice; in multistudy research papers, we ask that authors report all of the work they carried out, regardless of outcome. Authors may speculate as to why some of their work failed to confirm their hypotheses and need to appropriately caveat their conclusions, but dropping studies simply exacerbates the file-drawer problem and presents the conclusions of research as more definitive than they are.

No research project is perfect; there are always limitations that also need to be transparently reported. In 2019, we made it a requirement that all our research papers include a limitations section, in which authors explain methodological and other shortcomings and explicitly acknowledge alternative interpretations of their findings.

Science is messy, and the results of research rarely conform fully to plan or expectation. ‘Clean’ narratives are an artefact of inappropriate pressures and the culture they have generated. We strongly support authors in their efforts to be transparent about what they did and what they found, and we commit to publishing work that is robust, transparent and appropriately presented, even if it does not yield ‘clean’ narratives.

Published online: 21 January 2020 https://doi.org/10.1038/s41562-020-0818-9

Conservation Letters, February, 2019. Katie Moon, Angela M. Guerrero, Vanessa M. Adams, Duan Biggs, Deborah A. Blackman, Luke Craven, Helen Dickinson, Helen Ross

https://conbio.onlinelibrary.wiley.com/doi/epdf/10.1111/conl.12642

Abstract: Conservation practice requires an understanding of complex social-ecological processes of a system and the different meanings and values that people attach to them. Mental models research offers a suite of methods that can be used to reveal these understandings and how they might affect conservation outcomes. Mental models are representations in people’s minds of how parts of the world work. We seek to demonstrate their value to conservation and assist practitioners and researchers in navigating the choices of methods available to elicit them. We begin by explaining some of the dominant applications of mental models in conservation: revealing individual assumptions about a system, developing a stakeholder-based model of the system, and creating a shared pathway to conservation. We then provide a framework to “walkthrough” the stepwise decisions in mental models research, with a focus on diagram-based methods. Finally, we discuss some of the limitations of mental models research and application that are important to consider. This work extends the use of mental models research in improving our ability to understand social-ecological systems, creating a powerful set of tools to inform and shape conservation initiatives.

Our paper aims to assist researchers and practitioners to navigate the choices available in mental models research methods. The paper is structured into three sections. The first section explores some of the dominant applications and thus value of mental models for conservation research and practice. The second section provides a “walk through” of the step-wise decisions that can be useful when engaging in mental models research, with a focus on diagram-based methods. We present a framework to assist in this “walk through,” which adopts a pragmatist perspective. This perspective focuses on the most appropriate strategies to understand and resolve problems, rather than holding to a firm philosophical position (e.g., Sil & Katzenstein, 2010). The third section discusses some of the limitations of mental models research and application.

1 INTRODUCTION

2 THE ROLE FOR MENTAL MODELS IN CONSERVATION

2.1 Revealing individual assumptions about a system

2.2 Developing a stakeholder-based model of the system

2.3 Creating a shared pathway to conservation

3 THE TYPE OF MENTAL MODEL NEEDED

4 ELICITING OR DEVELOPING CONCEPTS AND OBJECTS

5 MODELING RELATIONSHIPS WITHIN MENTAL MODELS

5.1 Mapping qualitative relationships

5.2 Quantifying qualitative relationships

5.3 Analyzing systems based on mental models

6 COMPARING MENTAL MODELS

7 LIMITATIONS OF MENTAL MODELS RESEARCH FOR CONSERVATION POLICY AND PRACTICE

8 ADVANCING MENTAL MODELS FOR CONSERVATION

“From the speed of global warming to the likelihood of developing cancer, we must grasp uncertainty to understand the world. Here’s how to know your unknowns” By Anne Marthe van der Bles, New Scientist, 3rd July 2019

The above reminds me of the philosophers’ demands in The Hitchhiker’s Guide to the Galaxy: “We demand rigidly defined areas of doubt and uncertainty!” The philosophers were representatives of the Amalgamated Union of Philosophers, Sages, Luminaries and Other Thinking Persons and they wanted the universe’s second-best computer (Deep Thought) turned off, because of a demarcation dispute. It turns out, according to the above paper, that their demands were not so unreasonable after all :-)

Honig, Dan. 2018. Navigation by Judgment: Why and When Top Down Management of Foreign Aid Doesn’t Work. Oxford, New York: Oxford University Press.

Contents

Preface

Acknowledgments

Part I: The What, Why, and When of Navigation by Judgment

Chapter 1. Introduction – The Management of Foreign Aid

Chapter 2. When to Let Go: The Costs and Benefits of Navigation by Judgment

Chapter 3. Agents – Who Does the Judging?

Chapter 4. Authorizing Environments & the Perils of Legitimacy Seeking

Part II: How Does Navigation by Judgment Fare in Practice?

Chapter 5. How to Know What Works Better, When: Data, Methods, and Empirical Operationalization

Chapter 6. Journey Without Maps – Environmental Unpredictability and Navigation Strategy

Chapter 7. Tailoring Management to Suit the Task – Project Verifiability and Navigation Strategy

Part III: Implications

Chapter 8. Delegation and Control Revisited

Chapter 9. Conclusion – Implications for the Aid Industry & Beyond

Appendices

Appendix I: Data Collection

Appendix II: Additional Econometric Analysis

Bibliography

YouTube presentation by the author: https://www.youtube.com/watch?reload=9&v=bdjeoBFY9Ss

Snippet from video: Errors arising from too much or too little control can be seen or unseen. When control is too little, errors are more likely to be seen: people do things they should not have done. When control is too much, errors are likely to be unseen: people don’t do things they should have done. Given this asymmetry, and other things being equal, there is a bias towards too much control.

Book review: By Duncan Green in his 2018 From Poverty to Power blog

Publisher’s blurb:

Foreign aid organizations collectively spend hundreds of billions of dollars annually, with mixed results. Part of the problem in these endeavors lies in their execution. When should foreign aid organizations empower actors on the front lines of delivery to guide aid interventions, and when should distant headquarters lead?

In Navigation by Judgment, Dan Honig argues that high-quality implementation of foreign aid programs often requires contextual information that cannot be seen by those in distant headquarters. Tight controls and a focus on reaching pre-set measurable targets often prevent front-line workers from using skill, local knowledge, and creativity to solve problems in ways that maximize the impact of foreign aid. Drawing on a novel database of over 14,000 discrete development projects across nine aid agencies and eight paired case studies of development projects, Honig concludes that aid agencies will often benefit from giving field agents the authority to use their own judgments to guide aid delivery. This “navigation by judgment” is particularly valuable when environments are unpredictable and when accomplishing an aid program’s goals is hard to accurately measure.

Highlighting a crucial obstacle for effective global aid, Navigation by Judgment shows that the management of aid projects matters for aid effectiveness.