Authors: Pete Barbrook-Johnson, Alexandra S. Penn

Highly commended, both for the content and for making the whole publication FREE!

Available in PDF form, as a whole or in sections.

Overview

- Provides a practical and in-depth discussion of causal systems mapping methods

- Provides guidance on running systems mapping workshops and using different types of data and evidence

- Orientates readers to the systems mapping landscape and explores how we can compare, choose, and combine methods

- This book is open access, which means that you have free and unlimited access

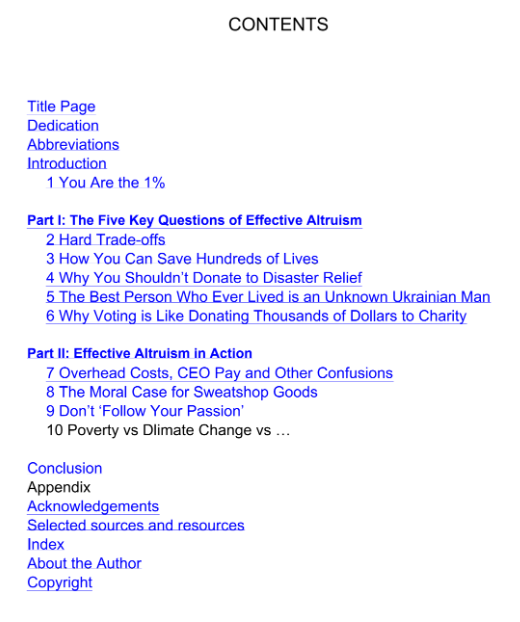

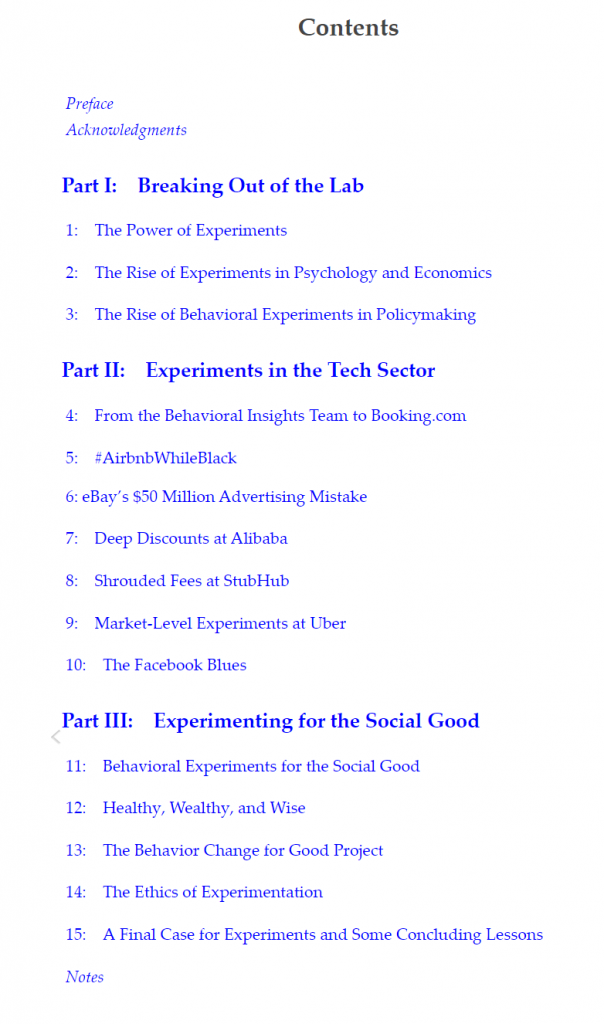

Contents:

- Introduction (pages 1-19)

- Rich Pictures (pages 21-32)

- Theory of Change Diagrams (pages 33-46)

- Causal Loop Diagrams (pages 47-59)

- Participatory Systems Mapping (pages 61-78)

- Fuzzy Cognitive Mapping (pages 79-95)

- Bayesian Belief Networks (pages 97-112)

- System Dynamics (pages 113-128)

- What Data and Evidence Can You Build System Maps From? (pages 129-143)

- Running Systems Mapping Workshops (pages 145-159)

- Comparing, Choosing, and Combining Systems Mapping Methods (pages 161-177)

- Conclusion (pages 179-182)

- Back Matter