Report of a study commissioned by the Department for International Development

DFID Working Paper No. 40. By Rick Davies, August 2013. Available as a PDF.

See also the DFID website: https://www.gov.uk/government/

[From the Executive Summary] “The purpose of this synthesis paper is to produce a short practically oriented report that summarises the literature on Evaluability Assessments, and highlights the main issues for consideration in planning an Evaluability Assessment. The paper was commissioned by the Evaluation Department of the UK Department for International Development (DFID) but intended for use both within and beyond DFID.

The synthesis process began with an online literature search, carried out in November 2012. The search generated a bibliography of 133 documents including journal articles, books, reports and web pages, published from 1979 onwards. Approximately half (44%) of the documents were produced by international development agencies. The main focus of the synthesis is on the experience of international agencies and on recommendations relevant to their field of work.

Amongst those agencies the following OECD DAC definition of evaluability is widely accepted and has been applied within this report: “The extent to which an activity or project can be evaluated in a reliable and credible fashion”.

Eighteen recommendations about the use of Evaluability Assessments are presented here [in the Executive Summary], based on the synthesis of the literature in the main body of the report. The report is supported by annexes, which include an outline structure for Terms of Reference for an Evaluability Assessment.”

The full bibliography referred to in the study can be found online here: https://www.mande.co.uk/wp-content/uploads/2013/02/Zotero-report.htm

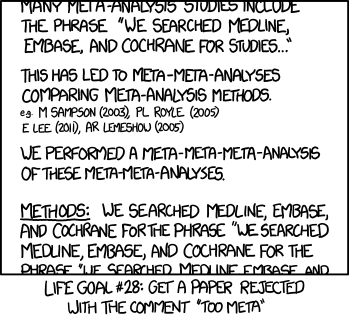

Postscript: A relevant xkcd perspective?